Besides surveillance footage, face data can also be harvested from the huge number of photos people publish on social media. Against a good algorithm, these photos offer no privacy at all.

However, researchers at the University of Chicago have developed a privacy protection measure for online photos that can effectively prevent facial data from being harvested without consent.

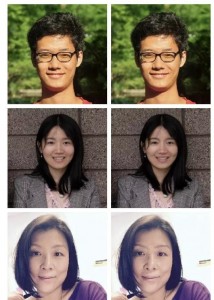

The human eye cannot tell the left and right versions of the same photo apart.

In fact, the algorithm has quietly made minor modifications to the picture on the right. These modifications are invisible to the naked eye, yet they deceive the world's most advanced face recognition models from Microsoft, Amazon and Megvii (Kuangshi) 100% of the time.

Such modifications address the worry that photos posted on social media will be scraped, analyzed and misused. This is the latest research from the University of Chicago: adding a small, imperceptible modification to a picture can make your face "invisible".

In this way, even if your photos on the Internet are scraped without permission, a face recognition model trained on that data cannot actually recognize your face.

The goal of the research is to let internet users keep sharing their photos while still effectively protecting their privacy.

Therefore, the "invisibility cloak" itself must also be "invisible", so that it does not change how the photos look.

In other words, this "invisibility cloak" is a set of small pixel-level modifications to the photo, designed to fool the AI's inspection.

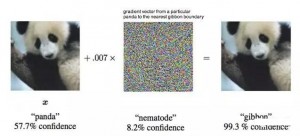

In fact, for deep neural networks, small perturbations aimed at a specific label can change what the model "perceives".

Fawkes takes advantage of this feature.
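The effect is easy to reproduce on a toy model. The sketch below is not Fawkes itself: it assumes a hypothetical four-feature linear classifier (the weights `w`, the input `x` and the budget `eps` are made up for illustration) and shows that shifting every feature by at most `eps` in a chosen direction is enough to flip the predicted label.

```python
import numpy as np

# Hypothetical linear classifier: predict class 1 when w.x + b > 0.
w = np.array([1.0, -2.0, 0.5, 3.0])
b = 0.0

def predict(x):
    """Return the predicted label (0 or 1) for input x."""
    return int(w @ x + b > 0)

# An input that the toy model assigns to class 1 (score = 0.1).
x = np.array([0.1, 0.1, 0.1, 0.05])

# Move every feature by at most eps against the sign of its weight.
# This lowers the score by eps * sum(|w|) = 0.02 * 6.5 = 0.13.
eps = 0.02
x_adv = x - eps * np.sign(w)

print(predict(x), predict(x_adv))
print(np.max(np.abs(x_adv - x)))
```

A deep face recognition model is far more complex, but the principle is the same: many tiny, coordinated changes add up to a large shift in the model's output.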

Here $x$ is the original picture, $x_T$ is a face photo from a different class (another person), and $\phi$ is the feature extractor of the face recognition model.

Fawkes is designed as follows:

Step 1: select the target class $T$

For a given user $u$, the input to Fawkes is the set of $u$'s photos, denoted $X_u$.

From a public face dataset containing many labeled classes, $K$ candidate target classes and their images are selected at random.

Using the feature extractor $\phi$, Fawkes computes the centroid of each class $k = 1 \dots K$ in feature space, denoted $C_k$.

Fawkes then picks, from the $K$ candidates, the class whose feature-space centroid differs most from the feature representations of all images in $X_u$, and uses it as the target class $T$.
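Step 1 can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the released Fawkes code: features are assumed to be precomputed by the extractor, the distance is plain L2, and "greatest difference from all images" is interpreted as maximizing the minimum distance between a class centroid and the user's features. The function names and toy data are hypothetical.

```python
import numpy as np

def class_centroids(class_features):
    """Map each candidate class to the mean of its feature vectors (C_k)."""
    return {k: np.mean(feats, axis=0) for k, feats in class_features.items()}

def select_target_class(user_features, class_features):
    """Pick the class whose centroid is farthest from the user's features.

    Assumption: the score of a class is the minimum L2 distance between
    its centroid and any of the user's feature vectors.
    """
    best_class, best_score = None, -np.inf
    for k, centroid in class_centroids(class_features).items():
        dists = np.linalg.norm(user_features - centroid, axis=1)
        score = dists.min()
        if score > best_score:
            best_class, best_score = k, score
    return best_class

# Toy 2-D "features" standing in for the extractor's output.
user_features = np.array([[0.0, 0.0], [0.2, 0.1]])
class_features = {
    "near": np.array([[1.0, 0.0], [1.0, 0.2]]),
    "far": np.array([[10.0, 10.0], [11.0, 9.0]]),
}

target = select_target_class(user_features, class_features)
print(target)
```

Choosing the most dissimilar class gives the cloak the largest possible distance to move the user's features, which is what makes the later misclassification likely.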

Step 2: compute the "invisibility cloak" for each image

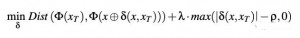

Randomly select an image $x_T$ from class $T$, then compute the "invisibility cloak" $\delta(x, x_T)$ for $x$ by optimizing according to the formula above, subject to $|\delta(x, x_T)| < \rho$.

The researchers measure the size of the perturbation with DSSIM (Structural Dis-Similarity Index). Bounding the cloak in this way ensures that the cloaked image remains visually almost identical to the original.
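The optimization in Step 2 can be illustrated with a simplified sketch. Several things here are assumptions made to keep the example self-contained: the feature extractor is a random linear map `W` rather than a deep network, the budget $\rho$ is enforced as a per-pixel bound by clipping rather than with DSSIM, and the optimizer is plain projected gradient descent. The function name `compute_cloak` and all parameter values are hypothetical.

```python
import numpy as np

def compute_cloak(x, x_target, W, rho=0.05, steps=200, lr=0.01):
    """Find delta that pulls the features of x + delta toward those of x_target.

    Minimizes ||W(x + delta) - W x_target||^2 by projected gradient descent,
    keeping every entry of delta within [-rho, rho] and pixels within [0, 1].
    """
    target_feat = W @ x_target
    delta = np.zeros_like(x)
    for _ in range(steps):
        residual = W @ (x + delta) - target_feat
        grad = 2.0 * W.T @ residual               # gradient of the squared distance
        delta = delta - lr * grad                 # gradient step
        delta = np.clip(delta, -rho, rho)         # enforce the perturbation budget
        delta = np.clip(x + delta, 0.0, 1.0) - x  # keep pixel values valid
    return delta

rng = np.random.default_rng(0)
W = rng.normal(size=(8, 64)) / 8.0    # stand-in linear "feature extractor"
x = rng.uniform(size=64)              # user's image, flattened, values in [0, 1]
x_target = rng.uniform(size=64)       # image drawn from the target class T

delta = compute_cloak(x, x_target, W)
before = np.linalg.norm(W @ x - W @ x_target)
after = np.linalg.norm(W @ (x + delta) - W @ x_target)
print(before, after, np.max(np.abs(delta)))
```

In feature space the cloaked image ends up closer to the target class than the original was, while no pixel changes by more than `rho`. A perceptual measure such as DSSIM plays the role of the clipping step in the real system, since it tracks what a human viewer would notice better than a per-pixel bound does.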

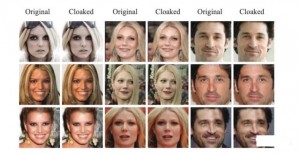

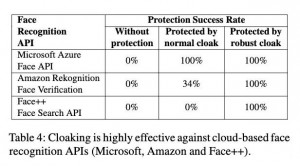

The experimental results show that, regardless of how the face recognition model is trained, Fawkes provides an effective protection rate of more than 95%, ensuring that the user's face is not recognized.

Even if some accidentally leaked, uncloaked photos end up in the training set of a face recognition model, an extended version of the design still lets Fawkes defeat recognition more than 80% of the time.

Against the most advanced face recognition services, namely the Microsoft Azure Face API, Amazon Rekognition and Megvii's Face++ Face Search API, Fawkes achieved a 100% "invisibility" rate.

Fawkes is now open source and available for macOS, Windows and Linux.

In this way, we can safely put the processed face photos on the Internet.

Even if malicious parties scrape those photos, the data they steal does not capture our real facial features, so there is no need to worry about a privacy leak.

What is more, the software can also "remedy" the face data you have already exposed on social networking sites.

For example, suppose you used to be a heavy internet user and posted a lot of photos of your life on social networking sites. Those photos may already have been scraped.

No matter.

If you now post cloaked images, automated face recognition systems, which keep adding training data to improve their accuracy, will pick them up as well.

At that point the cloaked images look even "better" to the AI, and the original images are discarded as outliers.

Shan Sixiong (Shawn Shan), a Chinese student, is pursuing a PhD at the University of Chicago under Professor Ben Y. Zhao (Zhao Yanbin) and Professor Heather Zheng.

As a member of the University of Chicago's SAND Lab, he focuses his research on the intersection of machine learning and security, such as how to use small, imperceptible data perturbations to protect users' privacy.

Emily Wenger, a co-author of the paper, is also a member of the SAND Lab at the University of Chicago, where she is pursuing a PhD in computer science. Her research area is the intersection of machine learning and privacy. She is currently studying the weaknesses and limitations of neural networks and their possible impact on privacy.

Post time: Jul-23-2021